While everyone was eagerly awaiting GPT-5, OpenAI threw a curveball on September 12, 2024, by releasing OpenAI o1.

In a surprising move, OpenAI reset the counter to 1 and named the new model OpenAI o1, signaling a focus on reasoning that sets it apart from the GPT series. This marks the beginning of the OpenAI o-series, which will stand alongside the GPT models we’ve all come to know.

The first version, o1-preview, is already making waves by excelling on standard benchmarks in fields like mathematics, coding, and puzzle-solving.

Furthermore, o1 represents a shift in how large language models (LLMs) are trained, dedicating more computational resources to both the training and inference phases.

However, o1 isn’t designed to replace GPT-4o in every instance. For tasks involving image inputs, function calling, or those requiring quicker response times, the GPT-4o and GPT-4o mini models will still be the better options.

Let’s dive into what makes the new o1 models unique!

How OpenAI o1 Works

When you first interact with o1, you’ll notice that it takes a bit longer to generate responses compared to GPT-4o. This extra time reflects its focus on reasoning. O1 spends more time “thinking” before answering, which allows it to handle more complex tasks like solving difficult problems in logic, math, coding, and science.

Tackling the Monty Hall Problem

OpenAI o1-preview is well suited to puzzles like the Monty Hall problem, where the intuitive answer is wrong and careful, step-by-step reasoning is needed to reach the right one.
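For readers who haven’t met the puzzle: a car is hidden behind one of three doors and goats behind the other two. You pick a door, the host (who knows where the car is) opens a different door to reveal a goat, and you may then switch. Intuition says it’s 50/50, but switching wins two-thirds of the time. The short sketch that follows was written for this article (it is not from OpenAI) and assumes the standard rules, where the host always opens an unpicked goat door. It checks the answer by enumerating every equally likely case with exact fractions.

```python
from fractions import Fraction
from itertools import product

DOORS = (0, 1, 2)


def monty_hall_win_rates() -> tuple[Fraction, Fraction]:
    """Return exact (stay, switch) win probabilities for the standard game.

    Every (car door, first pick) pair is equally likely, so enumerating
    all nine pairs yields the exact probabilities.
    """
    stay_wins = switch_wins = 0
    cases = 0
    for car, pick in product(DOORS, repeat=2):
        # The host opens a door that is neither your pick nor the car.
        # When two such doors exist (pick == car), either choice leads to the
        # same stay/switch outcome, so taking the first one is enough.
        opened = next(d for d in DOORS if d not in (pick, car))
        # Switching means taking the only door that is neither picked nor opened.
        switched = next(d for d in DOORS if d not in (pick, opened))
        stay_wins += pick == car
        switch_wins += switched == car
        cases += 1
    return Fraction(stay_wins, cases), Fraction(switch_wins, cases)


stay, switch = monty_hall_win_rates()
print("stay:", stay, "switch:", switch)  # stay: 1/3 switch: 2/3
```

Enumerating the cases with exact fractions, rather than running a random simulation, gives the answer exactly with no sampling noise.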

Reinforcement Learning and Chain-of-Thought

O1’s reasoning edge comes from a blend of reinforcement learning and chain-of-thought reasoning.

Reinforcement learning helps the model improve its thought process, learning from mistakes and refining strategies over time to find the best, most logical solutions.

Chain-of-thought reasoning allows o1 to break down complex problems into smaller, more digestible steps. It’s like planning a recipe before cooking so that no step is overlooked. Because each step is spelled out, o1 can catch and correct its own errors along the way instead of committing early to a flawed answer.

This reasoning method is particularly effective for fields like math, science, and coding, where problems often require multiple steps to arrive at the correct solution.

A New Approach to Compute Allocation

One of the standout features of OpenAI o1 is how it allocates compute. Traditional LLMs have improved mainly by scaling up pretraining, whereas o1 puts more compute into reinforcement learning during training and into reasoning at inference time.

OpenAI’s results suggest that spending more compute at these two stages significantly improves the model’s reasoning skills.

The chart below illustrates how increased compute directly improves o1’s accuracy, especially at test time.

OpenAI o1 Accuracy vs. Compute Power

The chart shows that as compute increases, so does o1’s pass@1 accuracy (correct on the first attempt) on problems from the AIME (American Invitational Mathematics Examination). The trend is particularly noticeable for test-time compute, where more “thinking time” leads to better performance.

This observation highlights the compute-heavy nature of o1 and suggests that more resources could further enhance its accuracy, pointing to exciting potential for future advancements in AI reasoning.
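OpenAI hasn’t published exactly how o1 turns extra test-time compute into accuracy, so treat the following as a loose analogy rather than o1’s method. One well-known way to convert more inference compute into accuracy is majority voting (often called self-consistency): sample several independent answers and keep the most common one. The sketch assumes each sample is independently correct with probability `p` and, for simplicity, counts a vote as correct only when a strict majority of the `n` samples is correct. The function name is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Each sample is assumed to be correct with probability p, independently
    of the others (a simplification; real samples are correlated).
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    needed = n // 2 + 1  # smallest strict majority
    # Binomial tail: sum over every count k of correct samples that wins the vote.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# More samples means more inference compute per question.
for n in (1, 3, 9, 27, 81):
    print(f"n={n:>2}: {majority_vote_accuracy(0.6, n):.3f}")
```

With `p = 0.6`, one sample is right 60% of the time and three votes raise that to 0.648, with accuracy continuing to climb as `n` grows. If `p` is below 0.5, the same voting drives accuracy down, a reminder that extra compute only pays off when the underlying reasoning is more often right than wrong.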

OpenAI o1 Benchmarks: Excelling in Reasoning-Heavy Tasks

To highlight o1’s enhanced reasoning abilities, OpenAI tested it on various tough math, coding, and science benchmarks, and the results were impressive.

Human Exams

In human exam-style tests, o1 consistently outperformed GPT-4o.

The biggest leaps over GPT-4o came in math and coding. While the improvement in science was more subtle, both o1-preview and the full o1 model outperformed human experts on PhD-level science questions (the GPQA Diamond benchmark). This suggests that o1 could help solve real-world problems and even surpass human performance in some narrow areas.

ML Benchmarks

Looking at machine learning benchmarks, o1’s advancements are clear. On both MathVista and MMLU, o1 showcased significant gains in accuracy over GPT-4o.

A specialized version of o1, called o1-ioi, showed off its coding skills by placing in the 49th percentile at the 2024 International Olympiad in Informatics (IOI) under strict competition conditions. In simulated Codeforces contests, o1-ioi performed even better, outscoring 93% of human competitors.

OpenAI o1 Use Cases

O1’s strong reasoning capabilities make it well suited to complex problems in fields like science, coding, and mathematics.

Scientific Research

In healthcare, for example, researchers could use o1 to annotate complex cell sequencing data, while physicists might apply it to generate advanced mathematical formulas for quantum optics research.

Coding

O1 can also help with code optimization, generate test cases, and even assist with code reviews. Its potential doesn’t stop at writing code — it could support project planning, requirements analysis, and software design, streamlining developers’ workflows and increasing productivity.

As OpenAI continues to refine the model, o1 is likely to become a valuable tool for developers and could change how we approach software development.

Mathematics

In math, o1’s reasoning skills can be used to solve complex equations, prove theorems, and explore new mathematical ideas, benefiting both students and researchers.

Reasoning-Heavy Applications

O1’s focus on reasoning makes it useful for any task that requires critical thinking. Whether it’s solving puzzles, analyzing complex arguments, or assisting in decision-making, o1 opens up new ways of problem-solving.

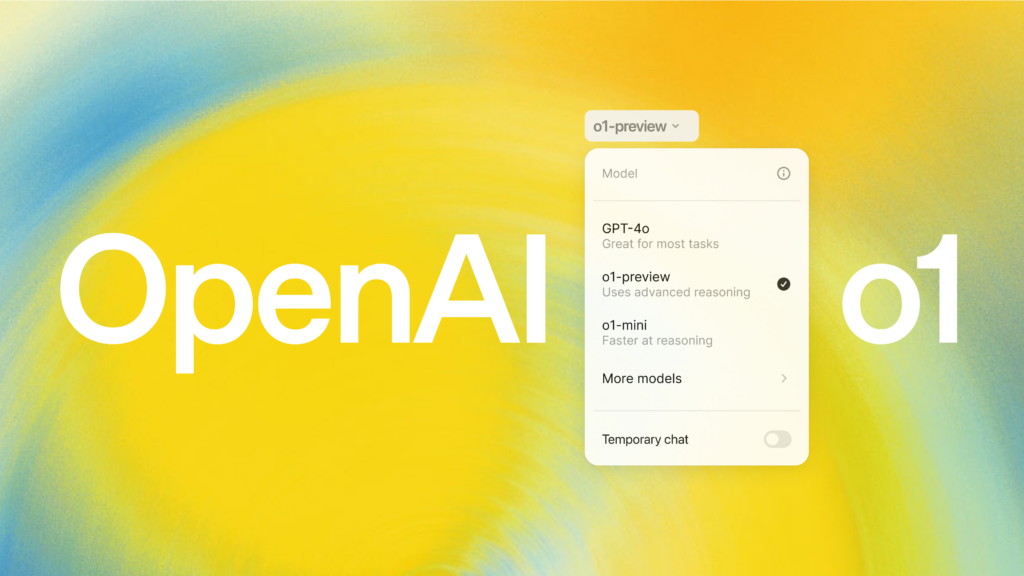

Accessing OpenAI o1

If you’re subscribed to ChatGPT Plus or ChatGPT Team, you can access the o1-preview model within the ChatGPT interface. Simply select o1-preview from the dropdown menu at the top.

It’s important to note that o1-preview is currently in its beta phase, meaning there are some usage limits, like 30 messages per week, and certain features (like browsing, file uploads, or image handling) are not yet supported.

OpenAI o1 API

For developers needing more flexibility, OpenAI offers access to the o1-preview and o1-mini models through the API. o1-preview is designed for complex problems that require broad knowledge, while o1-mini is a faster, more cost-effective option for coding, math, and science tasks that don’t require as much general knowledge.
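Here is a minimal sketch of preparing a request, assuming the official `openai` Python package and its Chat Completions endpoint. The helper names `build_o1_request` and `send_request` are hypothetical. Two assumptions reflect the o1 beta at launch: the prompt goes in a single user message (system messages were not accepted), and the output budget is set with `max_completion_tokens`, which covers the visible answer plus hidden reasoning tokens. The 25,000-token default is an illustrative starting budget, not a requirement. If the cap is too small, the reasoning can use it all up before any visible answer appears.

```python
def build_o1_request(prompt: str, model: str = "o1-mini",
                     max_completion_tokens: int = 25_000) -> dict:
    """Build keyword arguments for a Chat Completions call to an o1 model.

    Assumes a single user message (no system message) and an output cap
    that includes the hidden reasoning tokens.
    """
    if max_completion_tokens <= 0:
        raise ValueError("max_completion_tokens must be positive")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_completion_tokens": max_completion_tokens,
    }


def send_request(request: dict):
    """Send the request with the official SDK (pip install openai).

    Needs a recent SDK version and the OPENAI_API_KEY environment variable.
    """
    # Imported lazily so building requests works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()
    return client.chat.completions.create(**request)


request = build_o1_request("How many prime numbers are there below 100?")
print(request["model"], request["max_completion_tokens"])  # o1-mini 25000
```

Keeping payload construction separate from the network call makes it easy to inspect or test the request before spending tokens on it.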

Understanding Reasoning Tokens

A unique feature of o1 models is the introduction of “reasoning tokens.” These tokens represent the model’s internal thought process as it works through a problem. Although they are not returned through the API, they take up space in the context window and are billed as output tokens.
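To see how hidden reasoning tokens affect cost and context, here is a small sketch that breaks down a response’s usage. It assumes the usage data has the shape of the Chat Completions `usage` object, where `completion_tokens` already includes the reasoning tokens and `completion_tokens_details.reasoning_tokens` reports them separately. The function name, the token counts, the per-million-token prices, and the 128,000-token context window in the example are all hypothetical placeholders, not real OpenAI figures.

```python
def summarize_usage(usage: dict, input_price_per_m: float,
                    output_price_per_m: float, context_window: int) -> dict:
    """Break down token usage for a reasoning-model response.

    completion_tokens is assumed to include the hidden reasoning tokens,
    which are also reported under completion_tokens_details.reasoning_tokens.
    Prices are per million tokens and supplied by the caller.
    """
    prompt_tokens = usage["prompt_tokens"]
    completion_tokens = usage["completion_tokens"]
    details = usage.get("completion_tokens_details") or {}
    reasoning_tokens = details.get("reasoning_tokens", 0)

    return {
        "reasoning_tokens": reasoning_tokens,
        # The part of the output that actually appears in the response.
        "visible_output_tokens": completion_tokens - reasoning_tokens,
        # Reasoning tokens are charged at the output-token rate.
        "cost_usd": (prompt_tokens * input_price_per_m
                     + completion_tokens * output_price_per_m) / 1_000_000,
        # Reasoning tokens also occupy context-window space during generation.
        "context_remaining": context_window - prompt_tokens - completion_tokens,
    }


# Hypothetical numbers for illustration only (not a real response or real prices).
example_usage = {
    "prompt_tokens": 200,
    "completion_tokens": 1500,
    "completion_tokens_details": {"reasoning_tokens": 1200},
}
print(summarize_usage(example_usage, input_price_per_m=10.0,
                      output_price_per_m=40.0, context_window=128_000))
```

In this made-up example, only 300 of the 1,500 billed output tokens are visible, so most of the output cost goes to reasoning the user never sees. With the official Python SDK, `response.usage.model_dump()` should yield a dict of roughly this shape.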

Prompting Best Practices

When working with o1 models, it’s best to keep prompts simple and direct. Because the model already reasons step by step internally, techniques like few-shot prompting or explicitly instructing it to “think step by step” add little and can sometimes hinder its performance.
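The advice above can be captured in a tiny prompt helper. This is a hypothetical sketch, not an OpenAI utility. It states the task plainly, adds no few-shot examples and no “think step by step” instruction, and wraps any supporting material in XML-style tags. The delimiter is a common complementary practice assumed here, not something the API requires; it keeps background text clearly separate from the task.

```python
from typing import Optional


def build_direct_prompt(task: str, context: Optional[str] = None) -> str:
    """Compose a short, direct prompt for an o1-style reasoning model.

    No few-shot examples and no chain-of-thought instruction: the model is
    assumed to reason internally. Optional supporting text is delimited
    with <context> tags (an assumed convention).
    """
    prompt = task.strip()
    if context:
        prompt += f"\n\n<context>\n{context.strip()}\n</context>"
    return prompt


print(build_direct_prompt(
    "Summarize the main risk mentioned in the report in one sentence.",
    context="Quarterly revenue rose 4%, but supplier delays may push the launch to next year.",
))
```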

What is OpenAI o1-mini?

Along with o1-preview, OpenAI also released o1-mini, a smaller, faster, and cheaper variant. While it lacks some of the broad knowledge of its larger counterpart, it excels at coding, math, and science tasks where speed and efficiency are key.
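To pull together the model guidance scattered through this article, here is a rule-of-thumb helper. Following the article, GPT-4o handles image inputs, function calling, and fast responses; o1-preview handles hard problems that need broad knowledge; and o1-mini handles coding, math, and science. The function and its flags are hypothetical, and this is the article’s advice expressed in code, not an official OpenAI routing policy. Real choices should also weigh price and rate limits.

```python
def choose_model(needs_images: bool = False,
                 needs_function_calling: bool = False,
                 latency_sensitive: bool = False,
                 needs_broad_knowledge: bool = False) -> str:
    """Pick a model name following this article's rules of thumb."""
    # Features o1-preview/o1-mini lacked at launch, or cases where speed matters most.
    if needs_images or needs_function_calling or latency_sensitive:
        return "gpt-4o"
    # Hard reasoning that also draws on wide general knowledge.
    if needs_broad_knowledge:
        return "o1-preview"
    # Coding, math, and science reasoning where speed and cost matter.
    return "o1-mini"


print(choose_model(needs_broad_knowledge=True))  # o1-preview
```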

Limitations of OpenAI o1

While o1 is impressive, there are some limitations to keep in mind. These include hidden chain-of-thought reasoning, the inability to browse the web or upload files and images, and slower response times.

Safety and Future of OpenAI o1

On the safety front, OpenAI has tested o1’s resistance to “jailbreaking” and found significant improvements over GPT-4o. In addition, OpenAI is working with AI Safety Institutes in the U.S. and U.K. to ensure safer deployments of their models.

As for the future, the o-series promises exciting developments, with o1-preview showing impressive early results, particularly in reasoning-heavy tasks. While there are still challenges ahead, the potential of these models to transform fields like software development and scientific research is considerable.

Conclusion

Although many expected GPT-5, OpenAI surprised us with o1 — a model that prioritizes reasoning skills. Early results from o1-preview show its ability to tackle complex challenges in math, coding, and science. While it’s still in its early stages and faces some challenges, o1 represents a promising step forward in AI reasoning and cognitive capabilities.